Modern medical ethics: Critical issue for the 21st century | The News Tribe Blogs

“If we believe men have any personal rights at all as human beings, they have an absolute right to such a measure of good health as society, and society alone is able to give them” – Aristotle

The World Health Organization (WHO) defines health systems as “all the activities whose primary purpose is to promote, restore or maintain health”. As we move into the 21st century, the promotion and protection of human rights is gaining greater momentum. The 1946 WHO Constitution states: “The enjoyment of the highest attainable standard of health is one of the fundamental rights of every human being without distinction of race, religion, political belief, economic or social condition.”

Patients, families and healthcare professionals occasionally face complicated decisions about medical treatments. These decisions may clash with the morals, religious beliefs or healthcare plan of a patient and/or family. In such difficult situations, medical ethics is not only a careful review of how to act in the best interest of patients and their families, but also a matter of making good choices based on beliefs and values regarding life, health and suffering. In the past, only a few individual physicians devoted themselves to medical ethics. Beginning in the second half of the twentieth century, the field underwent explosive expansion, and experts from numerous disciplines entered it. Swift advances in medical diagnosis and treatment, together with the introduction of new technologies, have created numerous new ethical problems, resulting in the maturation of medical ethics as a specialty in its own right.

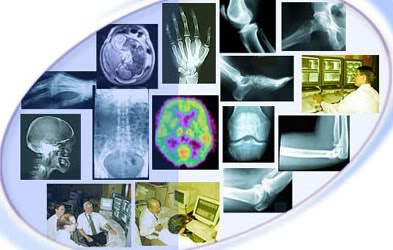

Enormous progress has been achieved in the medical field during the last few decades, and more is projected in the decades to come. Advances in diagnostic imaging and biological testing techniques, as well as in medical forecasting based on genetic testing, are ongoing. Advances in surgical and medical treatments, organ and tissue transplantation, artificial organs, cloning, tissue culture techniques, molecular biology and information technology are reported almost daily. “Modern Medical Ethics” is based on concepts derived from various disciplines, including the biomedical sciences, the behavioral sciences, philosophy, religion and law. Modern medical ethics is essentially a form of applied ethics, which seeks to clarify the ethical questions that characterize the practice of medicine and to justify and weigh the various practical options and considerations. Medical ethics is thus the application of general ethical principles to the ethical issues that arise in medicine. This kind of applied ethics is not specific to medicine; it also extends to economics, law, journalism and similar fields.

In developed countries, medical ethics is now part of the curriculum in institutes of the health professions, and research institutes of medical ethics have also been established at all levels. In these nations the literature on medical ethics has proliferated, with numerous books and journals devoted entirely to the subject. Ordinary citizens in such countries are also vitally interested in the subject, and public lectures, newspaper articles, legal discussions and legislation on medical ethical issues are frequent. In Canada, the EU, the United States and, to some extent, the Gulf countries, the medical ethicist has emerged as a new professional. These individuals have normally specialized in one or more of the fields of philosophy, ethics, law, religion and medicine, and serve as advisors in hospitals to physicians, patients and their families. They also work to resolve difficult ethical questions posed to them by the medical team or by patients and their families.